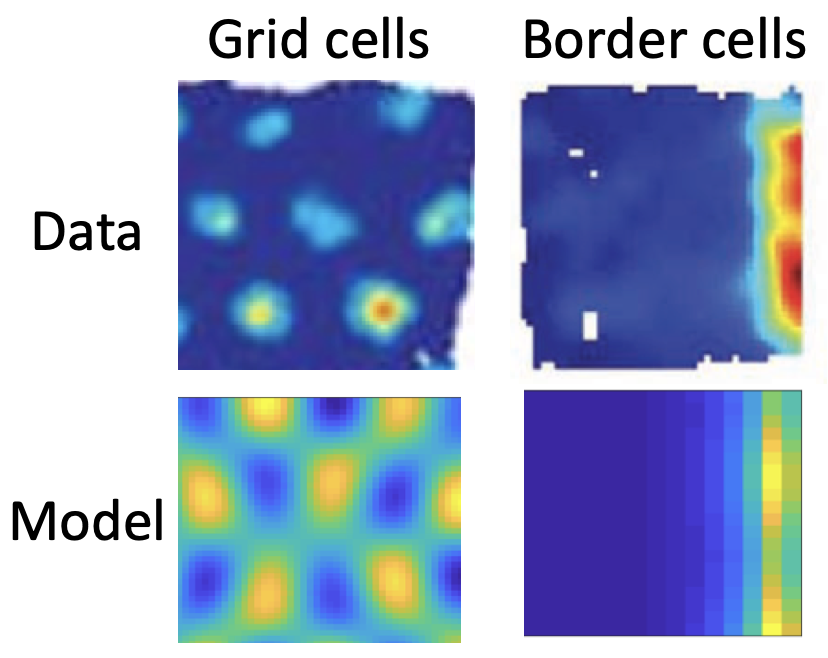

Solving complex planning problems efficiently and flexibly requires reusing expensive previous computations. The brain can do this, but how? We have developed a model, termed linear reinforcement learning, that addresses this question. The model reuses previous computations nimbly, enabling biologically realistic, flexible choice at the expense of specific, quantifiable control costs. It reproduces the flexibilities and inflexibilities seen in animals and unifies several hitherto distinct areas, including planning, entorhinal maps (grid cells and border cells), and cognitive control.

Related Publications:

Nature Communications, 2021

bioRxiv, 2024

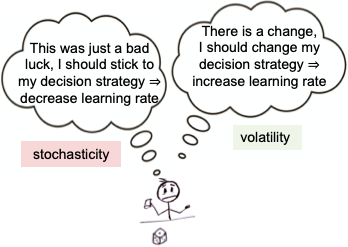

A key insight from Bayesian machine learning is that adaptive learning requires distinguishing between two types of noise: volatility (the speed of change) and stochasticity (moment-to-moment noise). Although this is a computationally difficult problem, the brain can solve it; the question is how. We addressed this issue by developing a model that learns to distinguish between volatility and stochasticity solely from observations over time. The model has two modules, a stochasticity module and a volatility module, which compete to explain experienced noise. This competition lets the model explain aspects of human and animal data across a wide range of seemingly unrelated neuroscientific and behavioral phenomena. It also provides a rich set of hypotheses about pathological learning in psychiatric conditions: damage to one module also alters behavior with respect to the other factor, because the model misattributes all noise to the remaining module.
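To see why the two kinds of noise must be told apart, consider a minimal sketch based on a standard scalar Kalman filter. This is not the model described above: here the volatility (process-noise variance) and stochasticity (observation-noise variance) are assumed to be known constants, whereas the model has to infer both from observations. The function name and parameters are illustrative only. The sketch shows that the two noise types push the learning rate in opposite directions.

```python
def steady_state_learning_rate(volatility, stochasticity, n_steps=200):
    """Return the Kalman gain (learning rate) after n_steps updates.

    volatility:    variance of the random-walk drift of the hidden state
    stochasticity: variance of the observation noise
    Both are assumed known here, purely for illustration.
    """
    posterior_var = 1.0  # arbitrary initial uncertainty about the hidden state
    gain = 0.0
    for _ in range(n_steps):
        # The hidden state may have drifted, so uncertainty grows with volatility.
        prior_var = posterior_var + volatility
        # The gain weighs uncertainty about the state against observation noise.
        gain = prior_var / (prior_var + stochasticity)
        # Observing an outcome shrinks the uncertainty.
        posterior_var = (1.0 - gain) * prior_var
    return gain


if __name__ == "__main__":
    baseline = steady_state_learning_rate(volatility=1.0, stochasticity=1.0)
    more_volatile = steady_state_learning_rate(volatility=4.0, stochasticity=1.0)
    more_stochastic = steady_state_learning_rate(volatility=1.0, stochasticity=4.0)
    print(f"baseline:        {baseline:.3f}")
    print(f"more volatile:   {more_volatile:.3f}")
    print(f"more stochastic: {more_stochastic:.3f}")
```

In this sketch, higher volatility raises the learning rate and higher stochasticity lowers it, so a learner that attributes noise to the wrong source would adjust its learning rate in the wrong direction.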

Related Publications:

Nature Communications, 2024

Nature Communications, 2021